Immutable consistency of monetary data

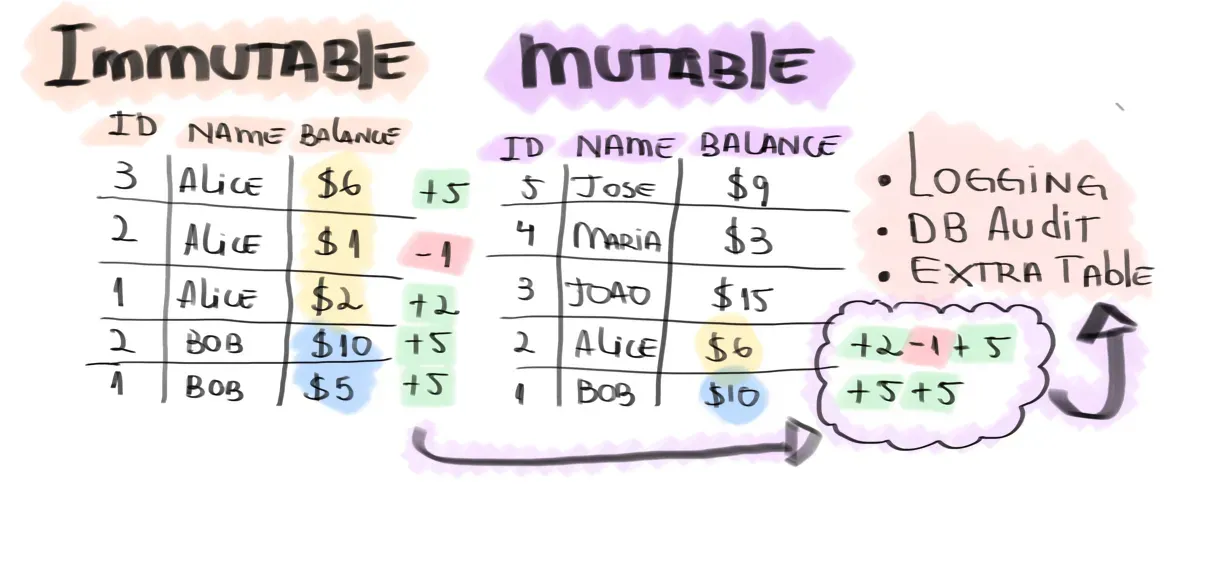

A mutable database increases the complexity of a system. Immutable data models increase scale and simplify the system.

Database updates delete data. Data is lost if we don't have the infrastructure to track changes. We will never know what was there before the update. But there is a better way: immutability. Designing for immutability makes our system simple. We don't need to read logs or database audit events to understand the past. We can look at the data itself.

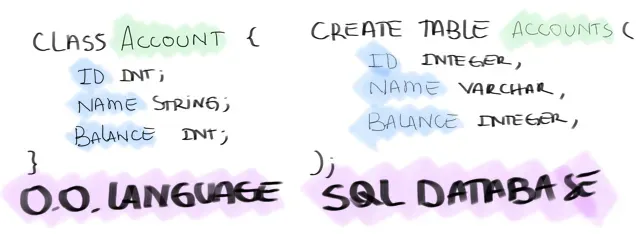

But why we use database updates? It's intuitive. We model a class from OO into a table in a relational database. And make changes in object fields to update the database columns. Mutability in the programming language has its downsides. But let's focus on the database for now.

Data loss

Let's say we are building a bank account app. Where are we storing the balances? As a database column, right? We have the Account class, and it has a Balance field. When a transaction happens, we update the balance. But what if I wanted to know the balance yesterday at 7:39 AM? We lost it. It gets erased with the new balances that came after that time. Our update deleted data.

This design uses database updates for consistency. It makes sure our users won't spend money they don't have. The balance database column represents money. It's whatever cash a user actually has. How can we do this without updates?

Immutable data model

Instead of updating the column when a new value comes in, we create a new database entry. Every transaction will have its own row with the balance at that time. The latest transaction holds the current balance.

We replaced updates, but how can we ensure consistency?

The SQL approach

Relational algebra can help us here. We build a select statement to get the current balance (the latest table row). And we use this statement in our insert. It will ensure that the amount of our new transaction is higher than the current balance.

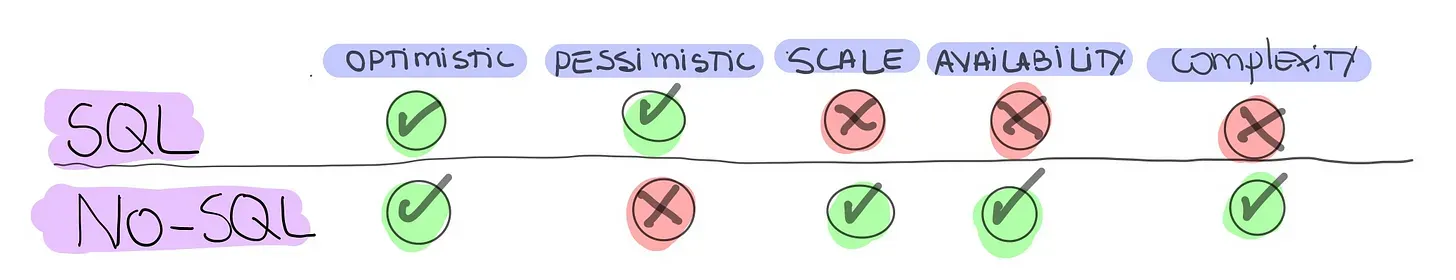

This approach uses pessimistic database locking. It is pretty heavy on the RDBMS engine. When ten transactions happen simultaneously, the database will queue and serialize them. It will get slower as the number of transactions increases. But the application code is simple. The database does most of the concurrency work.

The No-SQL approach

We use the same tactic of storing balance movements as new database entries. And the latest item holding the current balance. For consistency, we derive the key for new transactions documents from the current balance entry document (the latest row in the database). This derived id enables the conditional put. The database ensures only one id gets created, even with concurrent requests in a distributed setting.

No-SQL databases rely on optimistic locking. When concurrency happens, the database returns an error. It's up to the application layer to fix it. This is easier for the database. It scales well. And the application logic dealing with concurrency also supports horizontal scaling. SQL databases can use the same approach. It delivers better performance than pessimistic locking. But nothing compared to a No-SQL that scales horizontally.

Which one should you use?

After defining the database, choose optimistic or pessimistic locking. When performance is not an issue, going with pessimism will make life easier. You will leverage battle-tested code from RDBMS for dealing with concurrency. But if you care about performance and availability is sensitive, No-SQL delivers the best results by using optimistic locking.

Thanks for reading! Subscribe to get the next post in your inbox.